I have a dimension with 13,000+ elements. When it is placed on the filters toolbar, the page becomes unresponsive or very slow, and Chrome consumes over 1 GB of RAM. Is there anything I can do to improve this?

Hi @aeremenko

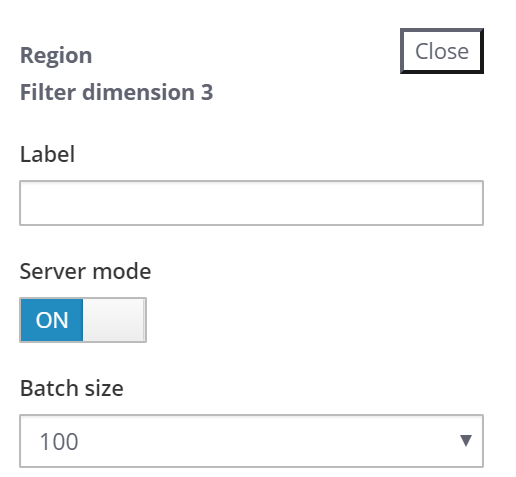

If a large dimension is on a filter, you can improve performance a lot by selecting “server mode” for the dimension. When server mode is on, you also need to set the batch size, i.e. the number of elements fetched at a time for display in the SUBNM list. Fetching one batch at a time is much faster than rendering the SUBNM for the whole dimension.
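Conceptually, server mode swaps "render every element" for "ask the server for a small, text-filtered batch". The Python below is a minimal sketch of that behaviour under assumed semantics (case-insensitive substring match, results capped at the batch size, flat list with no hierarchy); `fetch_batch` is a hypothetical name and not part of the Canvas API.

```python
from typing import List


def fetch_batch(elements: List[str], search_text: str, batch_size: int) -> List[str]:
    """Return at most `batch_size` elements whose names contain `search_text`.

    Hypothetical sketch of server-mode behaviour: matching is a
    case-insensitive substring test and the result is a flat list
    (no hierarchy), so the browser only ever renders `batch_size` rows.
    """
    if batch_size <= 0:
        return []
    needle = search_text.casefold()
    matches: List[str] = []
    for name in elements:
        if needle in name.casefold():
            matches.append(name)
            if len(matches) == batch_size:
                break  # stop scanning as soon as the batch is full
    return matches


if __name__ == "__main__":
    # A 13,000-element list stands in for the real dimension.
    dimension = [f"Product {i:05d}" for i in range(13_000)]
    print(fetch_batch(dimension, "", 5))      # empty search: just the first batch
    print(fetch_batch(dimension, "1234", 5))  # typed text narrows the batch
```

The empty search only ever shows the first batch; any other element is reachable only by typing text that matches it, which is why the list does not grow as you scroll.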


Note

You should only use server mode for filter dimensions with more than 1,000 elements (and then only if there is a measurable performance benefit), because for smaller dimensions or smaller subsets the extra server round trip adds more overhead than it saves.

When I turn server mode on, the subset picklist shows no hierarchies and displays only the number of elements specified in the batch size. I would expect it to load more elements as I scroll the list.

If I select an element through the subset editor, it may or may not show up in the picklist.

Hi @aeremenko,

Server mode doesn’t work that way: you need to type text to filter the list, and it always returns a maximum number of elements (the batch size), with no hierarchy.

@aeremenko Tim is right. What we are doing there is pretty much the same as what happens in a Canvas SUBNM in server mode; we only tweaked the SUBNM directive slightly for look and feel in the UX, but the guts are still the same.

Maybe you can try cascading filters: first select a consolidation (such as a product group, or whatever the equivalent is in the dimension you are looking at), then use an MDX placeholder in the second dropdown so that it only shows the children of that consolidation. I don’t know whether that is an option for your use case.
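The cascading idea boils down to generating the second dropdown's MDX from the first dropdown's selection. Below is a minimal Python sketch of that string-building step, assuming the dimension's default hierarchy and a hypothetical `Product` dimension; how the selection is injected into the MDX placeholder is tool-specific and not shown, and `children_set_mdx` / `second_dropdown` are illustrative names only.

```python
from typing import Dict, List


def mdx_member(dimension: str, element: str) -> str:
    """Quote a dimension/element pair as an MDX member reference.

    A closing bracket inside a name is escaped by doubling it (]]),
    as MDX requires for bracketed identifiers.
    """
    def quote(name: str) -> str:
        return "[" + name.replace("]", "]]") + "]"

    return quote(dimension) + "." + quote(element)


def children_set_mdx(dimension: str, parent: str) -> str:
    """MDX set for the second dropdown: children of the selected consolidation."""
    return "{" + mdx_member(dimension, parent) + ".Children}"


def second_dropdown(hierarchy: Dict[str, List[str]], selected_parent: str) -> List[str]:
    """In-memory stand-in for what the children set would return."""
    return hierarchy.get(selected_parent, [])


if __name__ == "__main__":
    # Hypothetical product groups; the real dimension will differ.
    hierarchy = {
        "Beverages": ["Cola", "Lemonade", "Water"],
        "Snacks": ["Chips", "Pretzels"],
    }
    print(children_set_mdx("Product", "Beverages"))  # {[Product].[Beverages].Children}
    print(second_dropdown(hierarchy, "Snacks"))      # ['Chips', 'Pretzels']
```

Because the second list only ever holds one group's children, it stays small enough to render normally, without server mode.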

We are in a similar situation right now. We are trying to paginate the rows being displayed, but we need to filter on 18 different attributes, with multiple-value selection on the same attribute.

Is there any way we can leverage server mode to speed this up? Currently, filtering the dimension in UX takes around 5 to 20 minutes to return data when more than 3 filters are applied, depending on how complicated the search is. In a SQL environment, this kind of complicated filtering takes roughly 5 seconds on a bad day, since the result may be fewer than 10 elements (10 rows).

Right now we are building a custom widget plus MDX to calculate the total number of elements for pagination, and possibly to speed up subset generation, but we wanted to see whether there is anything we can already leverage before we build something more complicated.
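The filtering and pagination requirement above (AND across attributes, OR across the values selected for one attribute, plus a total count for paging) can be expressed as a single pass over the attribute values. This is a minimal in-memory Python sketch, not TM1 code: it assumes the attribute values have already been loaded into a dictionary, and `filter_elements`, `paginate` and the sample `SKU-*` elements are hypothetical.

```python
import math
from typing import Dict, List, Set, Tuple

Attributes = Dict[str, Dict[str, str]]  # element -> {attribute name -> value}


def filter_elements(attrs: Attributes, criteria: Dict[str, Set[str]]) -> List[str]:
    """Keep elements whose value for every filtered attribute is one of the selected values.

    Attributes combine with AND; the values selected for one attribute
    combine with OR (multi-value selection). Attributes missing from
    `criteria` are not filtered.
    """
    result = []
    for element, values in attrs.items():
        if all(values.get(attr) in allowed for attr, allowed in criteria.items()):
            result.append(element)
    return sorted(result)


def paginate(items: List[str], page: int, page_size: int) -> Tuple[List[str], int]:
    """Return the rows for a 1-based `page` plus the total page count."""
    if page < 1 or page_size < 1:
        raise ValueError("page and page_size must be >= 1")
    total_pages = max(1, math.ceil(len(items) / page_size))
    start = (page - 1) * page_size
    return items[start:start + page_size], total_pages


if __name__ == "__main__":
    attrs = {
        "SKU-1": {"Colour": "Red", "Size": "S"},
        "SKU-2": {"Colour": "Blue", "Size": "M"},
        "SKU-3": {"Colour": "Red", "Size": "M"},
        "SKU-4": {"Colour": "Green", "Size": "M"},
    }
    rows = filter_elements(attrs, {"Colour": {"Red", "Blue"}, "Size": {"M"}})
    print(rows)                  # ['SKU-2', 'SKU-3']
    print(paginate(rows, 1, 1))  # (['SKU-2'], 2)
```

The total page count falls out of the filtered list's length, so no separate counting query is needed once the filter has run.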

Server mode is really about improving the performance when you have a really large subset of members applied to a filter dimension so the whole list doesn’t get rendered in the SUBNM list (but via wildcard filter you can still select any member even if it isn’t in the current batch displayed in the list).

It sounds like here you have a really large starting set, but after applying filters the final set that you want to display in the SUBNM is quite small (or could be quite small). So I don’t think server mode will help.

I’m guessing that the 5 to 20 minutes you are talking about has nothing to do with UX performance and is instead the slowness of executing the MDX filters? MDX can be really slow sometimes. I would suggest a starting form or process popup that does the filtering via TI, which should be much faster. And rather than using MDX to drive the final list in the filter, make it a named static subset that the TI process updates (or even a separate helper dimension whose elements you wipe and rebuild on each process run).

Yes, the dimension is not really large; I am talking about 30k elements.

MDX is very likely the problem; it is actually much faster if I don’t apply any filtering.

But thanks for the insight, I guess I know what I should do to improve the performance.

Thanks